The SML neural network engine.

Enumerations

| Type | Name | Description |
|---|---|---|
| enum | sml_ann_activation_function { SML_ANN_ACTIVATION_FUNCTION_SIGMOID, SML_ANN_ACTIVATION_FUNCTION_SIGMOID_SYMMETRIC, SML_ANN_ACTIVATION_FUNCTION_GAUSSIAN, SML_ANN_ACTIVATION_FUNCTION_GAUSSIAN_SYMMETRIC, SML_ANN_ACTIVATION_FUNCTION_ELLIOT, SML_ANN_ACTIVATION_FUNCTION_ELLIOT_SYMMETRIC, SML_ANN_ACTIVATION_FUNCTION_COS, SML_ANN_ACTIVATION_FUNCTION_COS_SYMMETRIC, SML_ANN_ACTIVATION_FUNCTION_SIN, SML_ANN_ACTIVATION_FUNCTION_SIN_SYMMETRIC } | The functions that are used by the neurons to produce an output. |
| enum | sml_ann_training_algorithm { SML_ANN_TRAINING_ALGORITHM_QUICKPROP, SML_ANN_TRAINING_ALGORITHM_RPROP } | Algorithm types used to train a neural network. |

Functions

| Return type | Function | Description |
|---|---|---|
| struct sml_object * | sml_ann_new (void) | Creates an SML neural network engine. |
| bool | sml_ann_set_activation_function_candidates (struct sml_object *sml, enum sml_ann_activation_function *functions, unsigned int size) | Set the neural network activation function candidates. |
| bool | sml_ann_set_cache_max_size (struct sml_object *sml, unsigned int max_size) | Set the maximum number of neural networks in the cache. |
| bool | sml_ann_set_candidate_groups (struct sml_object *sml, unsigned int candidate_groups) | Set the number of neural network candidate groups. |
| bool | sml_ann_set_desired_error (struct sml_object *sml, float desired_error) | Set the neural network desired error. |
| bool | sml_ann_set_initial_required_observations (struct sml_object *sml, unsigned int required_observations) | Set the required number of observations to train the neural network. |
| bool | sml_ann_set_max_neurons (struct sml_object *sml, unsigned int max_neurons) | Set the maximum number of neurons in the network. |
| bool | sml_ann_set_training_algorithm (struct sml_object *sml, enum sml_ann_training_algorithm algorithm) | Set the neural network training algorithm. |
| bool | sml_ann_set_training_epochs (struct sml_object *sml, unsigned int training_epochs) | Set the number of neural network training epochs. |
| bool | sml_ann_supported (void) | Check whether SML was built with neural network support. |
| bool | sml_ann_use_pseudorehearsal_strategy (struct sml_object *sml, bool use_pseudorehearsal) | Set the pseudorehearsal strategy. |
| bool | sml_is_ann (struct sml_object *sml) | Check whether the SML object is a neural network engine. |

The SML neural network engine.

A neural network consists of a set of interconnected neurons distributed in layers, usually three (input, hidden and output layers). Each connection between neurons has an associated weight. These weights are initialized randomly with values between -0.2 and 0.2 and adjusted during the training phase, so that the neural network output predicts the right value.
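The random initialization described above can be sketched in C as follows. This is an illustration only, assuming a uniform distribution drawn with the standard rand(); the helper name init_weights is hypothetical and is not part of the SML API.

```c
#include <stdlib.h>

/* Hypothetical helper: fills `weights` with pseudo-random values in
 * [-0.2, 0.2]. Assumes a uniform distribution based on rand(); the
 * engine's actual random generator is not specified here. */
static void init_weights(float *weights, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        /* rand() / RAND_MAX lies in [0, 1]; scale to [0, 0.4] and shift. */
        float unit = (float)rand() / (float)RAND_MAX;
        weights[i] = unit * 0.4f - 0.2f;
    }
}
```

Calling srand() with a fixed seed before init_weights makes the initial weights reproducible, which can help when comparing training runs.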

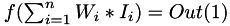

The neuron is the basic unit of the neural network and is responsible for producing an output value based on its inputs. The output is calculated using the following formula:

$$y = f\left(\sum_{i=1}^{n} x_i w_i\right) \qquad (1)$$

Where:

- $i$ is the index of the input.
- $n$ is the number of inputs.
- $x_i$ is the input value for input $i$.
- $w_i$ is the weight value for input $i$.
- $y$ is the output value.
- $f$ is the activation function.

The activation function can be any differentiable function chosen by the user; however, most problems can be solved using the sigmoid function.

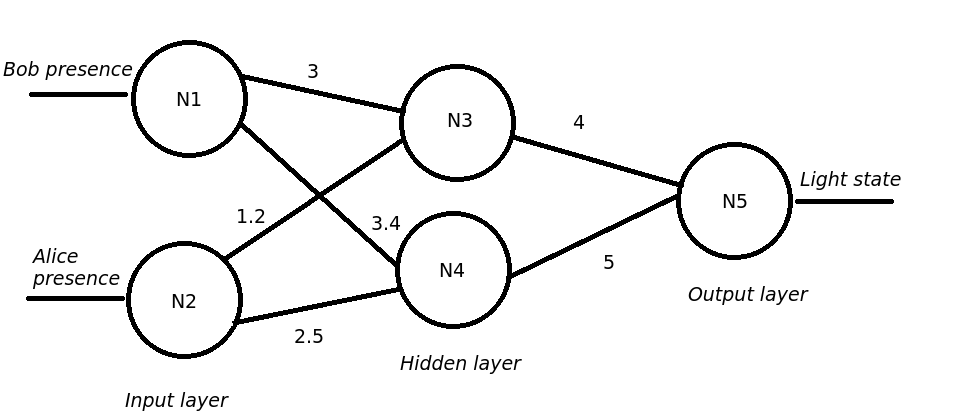

As an example, imagine that one wants to predict whether a light should be on or off based on whether Bob and Alice are present in the room, using the following trained neural network.

Consider that Bob is present (input is 1) and Alice is not (input is 0).

The first step is to provide Bob's and Alice's presence to the input neurons. In a neural network the input neurons are special, because they do not apply formula (1) to produce an output: the output value of an input neuron is the input value itself. So in this case neurons N1 and N2 will output 1 and 0, respectively.

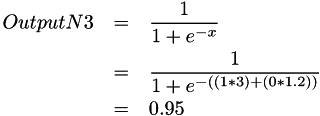

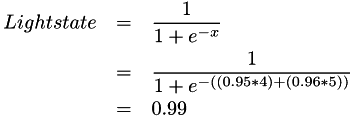

Consider that all neurons use the sigmoid function, defined as:

$$f(x) = \frac{1}{1 + e^{-x}}$$

Using the formula (1) the N3's output will be:

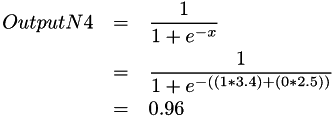

The same thing for N4:

Finally the Light state (N5):

The neural network predicts that the light state is 0.99. As this is very close to 1, we can consider that the light should be on.

The example above uses two neurons in the hidden layer; however, for some problems two neurons are not enough. There is no silver bullet for how many neurons one should use in the hidden layer, and this number is usually obtained by trial and error. SML handles this automatically: during the training phase, SML chooses the neural network topology (how many neurons the hidden layer must have) and also decides which activation function is best.

The SML neural network engine has two methods of operation. Both try to reduce or eliminate a problem called catastrophic forgetting that affects neural networks.

Basically, catastrophic forgetting is a problem in which a neural network may forget everything it has learnt in the past and retain only recent memory. This happens due to the nature of neural network training. In order to reduce this problem, the following methods were implemented.

The first method is called pseudo-rehearsal (the default). In this method only one neural network is created, and every time it needs to be retrained, random inputs are generated and fed to the network. The corresponding outputs are stored and used, together with the newly collected data, to train the neural network.
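A minimal sketch of the rehearsal step, under stated assumptions: the old network is reached through a hypothetical predict_fn callback, random inputs are drawn uniformly from [0, 1] with rand(), and make_pseudo_items and sum_predict are illustrative names rather than SML functions. sum_predict is only a toy stand-in for a trained network.

```c
#include <stdlib.h>

/* Hypothetical callback: runs the current (old) network on one input vector. */
typedef void (*predict_fn)(const float *in, float *out, void *ctx);

/* Builds `count` pseudo-items: random inputs in [0, 1] labelled with whatever
 * the old network currently outputs. `inputs` must hold count * n_in floats
 * and `outputs` must hold count * n_out floats. */
static void make_pseudo_items(predict_fn predict, void *ctx, size_t n_in,
                              size_t n_out, size_t count,
                              float *inputs, float *outputs)
{
    for (size_t k = 0; k < count; k++) {
        float *in = inputs + k * n_in;
        for (size_t i = 0; i < n_in; i++)
            in[i] = (float)rand() / (float)RAND_MAX; /* random input */
        predict(in, outputs + k * n_out, ctx);       /* old network's answer */
    }
}

/* Toy "old network": one output equal to the sum of its inputs.
 * ctx points to the number of inputs. */
static void sum_predict(const float *in, float *out, void *ctx)
{
    size_t n = *(const size_t *)ctx;
    float s = 0.0f;
    for (size_t i = 0; i < n; i++)
        s += in[i];
    out[0] = s;
}
```

The resulting input/output pairs would then be combined with the newly collected observations before retraining, so the network is pushed to keep reproducing its old behavior while it learns the new data.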

The second method of operation consists of creating N neural networks, each very specific to a pattern that SML encounters. Every time SML wants to make a prediction, it chooses the best neural network for the current situation. The number of neural networks SML keeps in memory can be limited with sml_ann_set_cache_max_size. This cache implements the LRU (least recently used) algorithm, so neural networks that were not recently used will be deleted.
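The LRU eviction policy can be illustrated with a small fixed-size cache. This is a hypothetical sketch, not SML's cache: integer ids stand in for trained networks, max_size mirrors the limit passed to sml_ann_set_cache_max_size, ids are assumed non-negative (so -1 can mean "nothing evicted"), and a linear scan is used for simplicity.

```c
#include <stddef.h>

#define LRU_CAPACITY 8 /* hypothetical hard upper bound for this sketch */

/* Hypothetical cache slot: `id` stands in for one trained neural network. */
struct lru_entry {
    int id;
    unsigned long last_used; /* logical time of the most recent use */
};

struct lru_cache {
    struct lru_entry entries[LRU_CAPACITY];
    size_t size;
    size_t max_size;         /* plays the role of the cache size limit */
    unsigned long clock;
};

/* Records a use of network `id`, inserting it if it is not cached yet.
 * When the cache is full, the least recently used network is evicted.
 * Returns the evicted id, or -1 if nothing was evicted. */
static int lru_use(struct lru_cache *c, int id)
{
    size_t limit = c->max_size < LRU_CAPACITY ? c->max_size : LRU_CAPACITY;

    c->clock++;
    for (size_t i = 0; i < c->size; i++) {
        if (c->entries[i].id == id) {   /* hit: refresh its timestamp */
            c->entries[i].last_used = c->clock;
            return -1;
        }
    }
    if (limit == 0)
        return id;                      /* nothing can be cached */
    if (c->size < limit) {              /* room left: append */
        c->entries[c->size].id = id;
        c->entries[c->size].last_used = c->clock;
        c->size++;
        return -1;
    }
    /* Full: overwrite the slot with the oldest last_used stamp. */
    size_t victim = 0;
    for (size_t i = 1; i < c->size; i++)
        if (c->entries[i].last_used < c->entries[victim].last_used)
            victim = i;
    int evicted = c->entries[victim].id;
    c->entries[victim].id = id;
    c->entries[victim].last_used = c->clock;
    return evicted;
}
```

With max_size = 2, using networks 1, 2, 1 and then 3 evicts network 2, because network 1 was used more recently.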

To learn more about catastrophic forgetting, see https://en.wikipedia.org/wiki/Catastrophic_interference

enum sml_ann_activation_function

The functions that are used by the neurons to produce an output.

enum sml_ann_training_algorithm

Algorithm types used to train a neural network.

struct sml_object* sml_ann_new (void)

Creates an SML neural network engine.

Returns: an sml_object on success, NULL on failure.

bool sml_ann_set_activation_function_candidates (struct sml_object *sml, enum sml_ann_activation_function *functions, unsigned int size)

Set the neural network activation function candidates.

Activation functions reside inside the neurons and are responsible for producing the neuron output value. As choosing the correct activation functions may require a lot of trial-and-error testing, SML uses an algorithm that tries to select the best activation functions for a given problem.

| Parameter | Description |
|---|---|
| sml | The sml_object object. |
| functions | The sml_ann_activation_function functions vector. |
| size | The size of the functions vector. |

Returns: true on success, false on failure.

bool sml_ann_set_cache_max_size (struct sml_object *sml, unsigned int max_size)

Set the maximum number of neural networks in the cache.

This cache stores the neural networks that are used to predict output values. Setting this limit is only necessary if the pseudorehearsal strategy is disabled with sml_ann_use_pseudorehearsal_strategy; otherwise it is ignored.

| Parameter | Description |
|---|---|
| sml | The sml_object object. |
| max_size | The maximum cache size. |

Returns: true on success, false on failure.

bool sml_ann_set_candidate_groups (struct sml_object *sml, unsigned int candidate_groups)

Set the number of neural network candidate groups.

During the training phase, SML chooses the network topology by itself. To do so, it creates M * 4 candidate neurons (where M is the number of activation function candidates) and then creates N candidate groups (where N is the number of candidate groups), ending up with M * 4 * N candidate neurons. The only difference between these candidate groups is their initial weight values.
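The candidate count described above is simple arithmetic; the hypothetical helper below just spells out M * 4 * N so the effect of each setting is easy to see. It is an illustration, not an SML function.

```c
/* Total candidate neurons: 4 per activation function candidate (M * 4),
 * replicated across N candidate groups that differ only in initial weights. */
static unsigned int total_candidate_neurons(unsigned int activation_candidates,
                                            unsigned int candidate_groups)
{
    return activation_candidates * 4u * candidate_groups;
}
```

For example, if all ten values of sml_ann_activation_function are candidates and two candidate groups are used, 10 * 4 * 2 = 80 candidate neurons are created, so each extra group or activation function noticeably increases training work.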

| Parameter | Description |
|---|---|
| sml | The sml_object object. |
| candidate_groups | The number of candidate groups. |

Returns: true on success, false on failure.

bool sml_ann_set_desired_error (struct sml_object *sml, float desired_error)

Set the neural network desired error.

This is used as a shortcut to stop the training phase: if the neural network error is equal to or below desired_error, training is stopped.

| Parameter | Description |
|---|---|
| sml | The sml_object object. |
| desired_error | The desired error. |

Returns: true on success, false on failure.

bool sml_ann_set_initial_required_observations (struct sml_object *sml, unsigned int required_observations)

Set the required number of observations to train the neural network.

SML only trains the neural network when the observation count reaches required_observations. However, this number is only a hint to SML: if SML detects that the provided number is not enough, required_observations will grow. The amount of memory SML uses to store observations can be capped with sml_set_max_memory_for_observations.

| Parameter | Description |
|---|---|
| sml | The sml_object object. |
| required_observations | The number of required observations. |

Returns: true on success, false on failure.

bool sml_ann_set_max_neurons (struct sml_object *sml, unsigned int max_neurons)

Set the maximum number of neurons in the network.

SML automatically adds neurons to the hidden layers when training the neural network; you can prevent the network from growing too big by setting the maximum number of neurons. Larger networks are more difficult to train and thus require more time; however, networks that are too small will make poor predictions.

| Parameter | Description |
|---|---|
| sml | The sml_object object. |
| max_neurons | The maximum number of neurons in the neural network. |

Returns: true on success, false on failure.

bool sml_ann_set_training_algorithm (struct sml_object *sml, enum sml_ann_training_algorithm algorithm)

Set the neural network training algorithm.

The training algorithm is responsible for adjusting the neural network weights.

| Parameter | Description |
|---|---|
| sml | The sml_object object. |
| algorithm | The sml_ann_training_algorithm algorithm. |

Returns: true on success, false on failure.

bool sml_ann_set_training_epochs (struct sml_object *sml, unsigned int training_epochs)

Set the number of neural network training epochs.

The number of training epochs is used to decide when to stop training: if the desired error is never reached, the training phase stops when it reaches training_epochs.
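Together with the desired error, this gives two stopping conditions, whichever is hit first. The loop below is a hypothetical simulation of that rule, not SML's training code: errors[e] stands in for the error measured after each epoch, and run_training is an illustrative name.

```c
/* Simulated training loop. `errors` must hold at least `training_epochs`
 * values; errors[e] is the (hypothetical) error after epoch e + 1.
 * Returns the number of epochs actually run. */
static unsigned int run_training(const float *errors, float desired_error,
                                 unsigned int training_epochs)
{
    unsigned int epoch = 0;
    while (epoch < training_epochs) {  /* hard cap: training_epochs */
        float err = errors[epoch];     /* stand-in for one real epoch */
        epoch++;
        if (err <= desired_error)      /* shortcut: desired error reached */
            break;
    }
    return epoch;
}
```

With per-epoch errors of 0.5, 0.2, 0.05 and 0.01, a desired error of 0.1 stops training after epoch 3, while a desired error of 0.001 runs until the epoch limit.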

| Parameter | Description |
|---|---|
| sml | The sml_object object. |
| training_epochs | The number of training epochs. |

Returns: true on success, false on failure.

bool sml_ann_supported (void)

Check whether SML was built with neural network support.

Returns: true if SML has neural network support, false otherwise.

bool sml_ann_use_pseudorehearsal_strategy (struct sml_object *sml, bool use_pseudorehearsal)

Set the pseudorehearsal strategy.

For more information about the pseudorehearsal strategy, see Neural_Network_Engine_Introduction.

The default is true.

| Parameter | Description |
|---|---|
| sml | The sml_object object. |
| use_pseudorehearsal | true to enable, false to disable. |

Returns: true on success, false on failure.

bool sml_is_ann (struct sml_object *sml)

Check whether the SML object is a neural network engine.

| Parameter | Description |
|---|---|
| sml | The sml_object object. |

Returns: true if it is a neural network engine, false otherwise.

1.8.6